Bug #6394

closedSudden increase in capture.kernel_drops and tcp.pkt_on_wrong_thread after upgrading to 6.0.14

Description

We noticed that on some machines, metrics for capture.kernel_drops and tcp.pkt_on_wrong_thread started increasing immediately after upgrading to Suricata 6.0.14 from Suricata 6.0.13. Downgrading to 6.0.13 solved the issue right away.

Here's our capture configuration:

af-packet:

- interface: S5

cluster-id: 96

cluster-type: cluster_qm

threads: 22

defrag: yes

use-mmap: yes

mmap-locked: yes

tpacket-v3: yes

rollover: no

use-emergency-flush: yes

ring-size: 200000

block-size: 4194304

bpf-filter: "not host X.X.X.X and not host X.X.X.X"

We disabled the

bpf-filter to confirm the issue is unrelated to its use. Disabling it made no difference.

This is on a Debian bullseye machine with Intel X710 and X722 NICs.

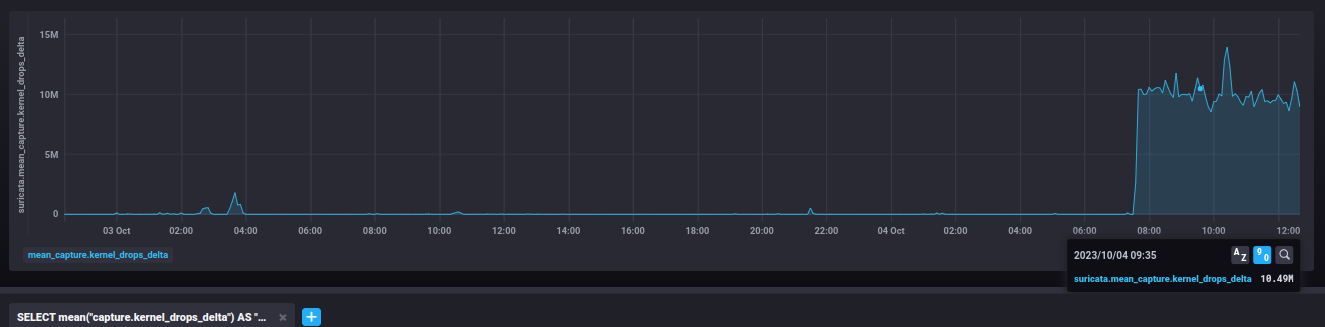

The graphs show the increase in the counters after the upgrade at 2023-10-04 ~7:30 UTC with no other configuration change.

Files

Updated by Victor Julien over 1 year ago

This is unexpected. Are you able to git bisect the changes between tags suricata-6.0.13..suricata-6.0.14 and see which commit introduces the issue?

Updated by Sascha Steinbiss over 1 year ago

Victor Julien wrote in #note-1:

Are you able to git bisect the changes between tags suricata-6.0.13..suricata-6.0.14 and see which commit introduces the issue?

Yeah, the packaging and deployment overhead makes this a bit tiresome, but I think there is no way around it. Will keep you updated.

Updated by Sascha Steinbiss over 1 year ago

Well, this took a while... after bisecting between 6.0.13 and 6.0.14, make dist-gzip and building Debian packages for each revision, and deploying them on the affected machines, we could in fact isolate the following commit as introducing the issue:

$ git bisect bad

ce67172bbf0f4c37ca59ee8fbd5e24369ec15f1a is the first bad commit

commit ce67172bbf0f4c37ca59ee8fbd5e24369ec15f1a

Author: Philippe Antoine <pantoine@oisf.net>

Date: Fri Jun 30 10:45:37 2023 +0200

decode: fix offset for DCE layer

Fixes: 136d351e403b ("decode: single network layer entrypoint")

Ticket: #3637

(cherry picked from commit fdf9cdf40068f00147bb07dd3f41a7556b8de823)

src/decode.h | 3 ++-

1 file changed, 2 insertions(+), 1 deletion(-)

In fact, at least one affected machine sees a Cisco Fabric Path layer in its traffic.

Updated by Sascha Steinbiss over 1 year ago

All machines affected with rising capture.kernel_drop and tcp.pkt_on_wrong_thread counters indeed see Cisco Fabric Path/DCE traffic. It is likely that there is an issue with that particular change.

We also observe another, probably related, issue on one machine where the same Suricata version that triggers above effect also triggers a rising decoder.invalid counter, but no increase in drops or packets on wrong thread. This machine does not regularly see Cisco Fabric Path/DCE traffic.

One of my initial concerns was that the fix in above commit actually made the DCE traffic processable by Suricata for the first time and the drops were just caused by hardware exhaustion due to suddenly higher inspection load. But we did not observe any rise in CPU load at a level that would saturate the machine's processing power.

Updated by Sascha Steinbiss over 1 year ago

Maybe it is also worth revisiting https://redmine.openinfosecfoundation.org/issues/3637 which caused this change

Updated by Victor Julien over 1 year ago

- Related to Optimization #3637: Performance impact of Cisco Fabricpath added

Updated by Victor Julien over 1 year ago

Are you able to extract some of the traffic to see if Suricata is processing the pcap correctly?

Updated by Philippe Antoine over 1 year ago

Maybe due to a 4 byte footer of DCE layer ?

Pcap definitely welcome

Updated by Sascha Steinbiss over 1 year ago

I'll find out if I can share a pcap.

Updated by Sascha Steinbiss over 1 year ago

Just to let you know that I won't be able to share full pcaps, but. I can isolate some packets with pseudonymized IPs and without PII or customer information. Would that be enough to test out the DCE parsing?

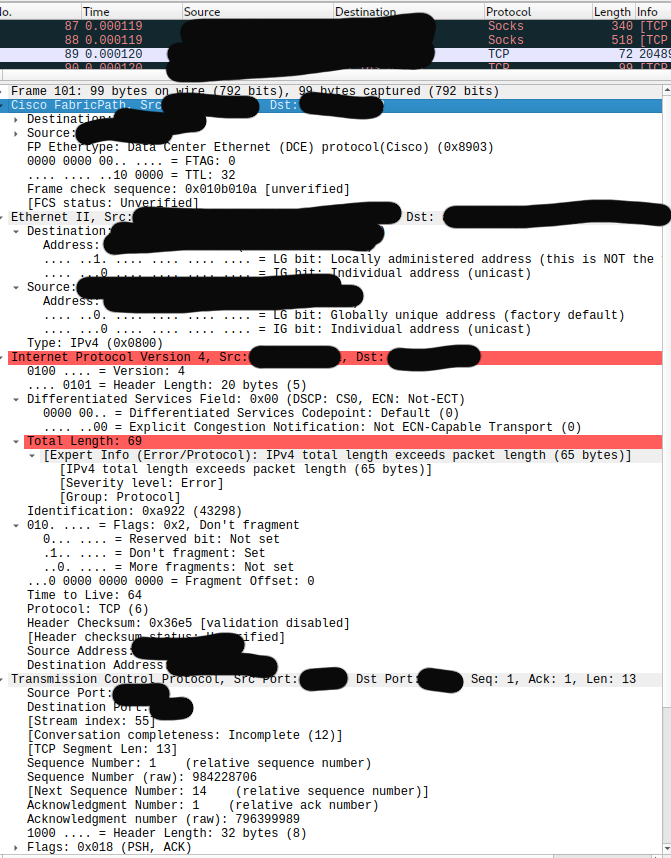

Looking at some of the packets in Wireshark I can see that there is indeed a footer present.

Updated by Sascha Steinbiss over 1 year ago

- File ciscows.png ciscows.png added

I haven't had much success providing you with data I can actually share, sorry. Anonymization tools TraceWrangler and pktanon either remove or modify the Cisco layer so the result looks a lot different from any original pcap. It looks like there's no actual traffic I can give out without a NDA.

I noticed though that some packets are apparently present twice in the pcap, once with and without FabricPath. Not sure if that's a problem. Also, I noticed that Wireshark lists the FabricPath packets as malformed due to some disagreement about packet length, most likely due to the 4-byte footer. See this redacted screenshot:

However, it also does this for the public test file: https://github.com/bro/bro/raw/master/testing/btest/Traces/cisco-fabric-path.pcap -- so if that's an issue, this file might already help.

Is there anything you'd have me do to debug locally? Any hunches that I might be able to help confirm or disprove?

Updated by Philippe Antoine over 1 year ago

@Sascha Steinbiss could you try a patched Suricata which handles better the footer ?

https://github.com/catenacyber/suricata/tree/decode-cisco-fabricpath-6394-v2

Updated by Philippe Antoine over 1 year ago

- Status changed from New to Assigned

- Assignee changed from OISF Dev to Philippe Antoine

- Target version changed from TBD to 7.0.3

Updated by Philippe Antoine over 1 year ago

- Related to Task #6443: Suricon 2023 brainstorm added

Updated by Sascha Steinbiss over 1 year ago

Philippe Antoine wrote in #note-12:

@Sascha Steinbiss could you try a patched Suricata which handles better the footer ?

https://github.com/catenacyber/suricata/tree/decode-cisco-fabricpath-6394-v2

I've prepared an internal package with that patch and am waiting for feedback. Thanks!

Updated by Sascha Steinbiss over 1 year ago

Switching to that patch changed the situation in a way that now the package drops (capture.kernel_drop) do not start increasing, but instead the decoder.invalid value is increasing where before it did not. See graph in the attachment.

No changes in the tcp.pkt_on_wrong_thread as far as I can see.

FYI, same behaviour seen with 7.0.2 so likely not 6.x backport-specific.

Updated by Philippe Antoine over 1 year ago

So, looks better.

Can you isolate where `decoder.invalid` comes from ? (anomaly events ?)

Updated by Philippe Antoine over 1 year ago

- Status changed from Assigned to In Review

Updated by Philippe Antoine over 1 year ago

However, it also does this for the public test file: https://github.com/bro/bro/raw/master/testing/btest/Traces/cisco-fabric-path.pcap -- so if that's an issue, this file might already help.

Wireshark finds these packets are malformed and this pcap looks crafted indeed...

Updated by Sascha Steinbiss over 1 year ago

Philippe Antoine wrote in #note-17:

So, looks better.

Not sure, are packets that are "invalid" inspected? Not really an improvement for us if drops are replaced by invalid packets if they are both not used for detection...

Can you isolate where `decoder.invalid` comes from ? (anomaly events ?)

We very briefly ran a separate EVE output file with anomaly type enabled only. Lots of `decoder.ipv4.trunc_pkt` with some `stream.pkt_invalid_timestamp` sprinkled in between:

ssteinbiss@xxxxxx:/tmp$ jq .anomaly.event < anomaly.yaml | sort | uniq -c |sort -rn

14016566 "decoder.ipv4.trunc_pkt"

543623 "stream.pkt_invalid_timestamp"

44016 "stream.3whs_synack_with_wrong_ack"

36474 "stream.wrong_thread"

24182 "stream.fin_but_no_session"

9258 "stream.rst_but_no_session"

8195 "stream.rst_invalid_ack"

8195 "stream.pkt_invalid_ack"

1919 "decoder.ipv6.trunc_pkt"

325 "stream.pkt_broken_ack"

32 "decoder.ipv4.opt_pad_required"

On the former, we didn't see any IP layer information, as might be expected:

{"timestamp":"2023-11-15T13:35:07.319793+0000","in_iface":"S5","event_type":"anomaly","src_ip":"","src_port":0,"dest_ip":"","dest_port":0,"proto":"","anomaly":{"type":"decode","event":"decoder.ipv4.trunc_pkt"}}

while on the others there was IP and TCP/UDP/ICMP information:

{"timestamp":"2023-11-15T13:35:07.322058+0000","flow_id":1243155887024462,"in_iface":"S5","event_type":"anomaly","src_ip":"10.1.1.4","src_port":636,"dest_ip":"10.1.2.1","dest_port":62904,"proto":"TCP","anomaly":{"type":"stream","event":"stream.3whs_synack_with_wrong_ack"}

Updated by Sascha Steinbiss over 1 year ago

Philippe Antoine wrote in #note-19:

However, it also does this for the public test file: https://github.com/bro/bro/raw/master/testing/btest/Traces/cisco-fabric-path.pcap -- so if that's an issue, this file might already help.

Wireshark finds these packets are malformed and this pcap looks crafted indeed...

Well, I can confirm that I also see this pattern in the traffic captured from an affected system (that has such a Cisco layer).

Updated by Philippe Antoine over 1 year ago

Thank you Sascha

Not really an improvement for us if drops are replaced by invalid packets if they are both not used for detection...

Are you sure the dropped packets were FabricPath ?

They may have been random drops...

We very briefly ran a separate EVE output file with anomaly type enabled only

This happens with the patch in https://github.com/OISF/suricata/pull/9779 right ?

What does it look like with master ?

Do you have a way (bpf filter) to filter out this FabricPath traffic ?

Well, I can confirm that I also see this pattern in the traffic captured from an affected system (that has such a Cisco layer).

I understand that you have a pcap for experimentation.

What anomaly events if you run https://github.com/OISF/suricata/pull/9779 on such a pcap ? And with master branch ?

Updated by Philippe Antoine over 1 year ago

Do you know if it is as in https://github.com/bro/bro/raw/master/testing/btest/Traces/cisco-fabric-path.pcap that you have both encapsulated and decapsulated traffic ?

In this pcap, the fabricpoath packets are always incomplete : at lesast missing the CRC footer, but ip4+udp : missing 16 more bytes, ip4+tcp : missing 6 bytes, ip6 missing only footer...

Updated by Victor Julien over 1 year ago

- Target version changed from 7.0.3 to 8.0.0-beta1

Tracking for 8, will set backports if/when patches are available.

Updated by Sascha Steinbiss over 1 year ago

Philippe Antoine wrote in #note-22:

Thank you Sascha

Not really an improvement for us if drops are replaced by invalid packets if they are both not used for detection...

Are you sure the dropped packets were FabricPath ?

They may have been random drops...

I don't know if they were. The behaviour was (given no change in traffic):

- 6.0.13: kernel drops counter is low, ~1%, almost no packets on wrong thread

- 6.0.14 update: kernel drops rise to ~50%, along with packet on wrong thread counters (see original post)

- Downgrade to 6.0.13 again: counters return to normal

- 6.0.15 with your patch: kernel drops stay low, but packet on wrong thread and decoder invalid counters rise instead

These drops we observed in 6.0.14 an 6.0.15 without the patch might or might not be exclusively Fabric Path, but they do not seem random to me but pretty correlated with the commit ;)

Any packet that may not be inspected properly post-6.0.14 that was inspected before is an issue, regardless of whether it is Fabric Path or not.

We very briefly ran a separate EVE output file with anomaly type enabled only

This happens with the patch in https://github.com/OISF/suricata/pull/9779 right ?

Yes, that's what we ran.

What does it look like with master ?

So... in fact the latest master? Will see if we can actually build and use that on our sensors. Will try that next week.

Do you have a way (bpf filter) to filter out this FabricPath traffic ?

Not yet, I'll have to find out if that can be done (via ethertypes I guess)

Well, I can confirm that I also see this pattern in the traffic captured from an affected system (that has such a Cisco layer).

I understand that you have a pcap for experimentation.

What anomaly events if you run https://github.com/OISF/suricata/pull/9779 on such a pcap ? And with master branch ?

Will also try that.

Updated by Sascha Steinbiss over 1 year ago

Ah, there's one more question... Thanks Philippe for getting into the details with us!

Do you know if it is as in https://github.com/bro/bro/raw/master/testing/btest/Traces/cisco-fabric-path.pcap that you have both encapsulated and decapsulated traffic ?

Yes, in our traffic there is also a mix of both.

In this pcap, the fabricpoath packets are always incomplete : at lesast missing the CRC footer, but ip4+udp : missing 16 more bytes, ip4+tcp : missing 6 bytes, ip6 missing only footer...

Same situation in our pcap. That's why I proposed this pcap as a substitute for ours. Of course I didn't look at all packets in the pcap, but a few samples I tool showed incomplete packets (see screenshot above).

Do you think that the traffic is broken in some way or Wireshark's and Suricata's assumptions about the traffic are not matching reality? I.e. the footer is not there all the time or in all variations of the standard (is it even one?)

Updated by Philippe Antoine over 1 year ago

Do you know if it is as in https://github.com/bro/bro/raw/master/testing/btest/Traces/cisco-fabric-path.pcap that you have both encapsulated and decapsulated traffic ?

Yes, in our traffic there is also a mix of both.

But I mean is each Fabric Path packet a duplicate of a non-encapsulated one ?

I.e. the footer is not there all the time or in all variations of the standard (is it even one?)

Did not find a specification, glad if you have one beyond Wireshark decoder ;-)

Updated by Sascha Steinbiss over 1 year ago

Philippe Antoine wrote in #note-22:

Thank you Sascha

Not really an improvement for us if drops are replaced by invalid packets if they are both not used for detection...

Are you sure the dropped packets were FabricPath ?

They may have been random drops...We very briefly ran a separate EVE output file with anomaly type enabled only

This happens with the patch in https://github.com/OISF/suricata/pull/9779 right ?

What does it look like with master ?

Master (i.e. 90c17652a3ee2e7cad7e61515b389fa3b55996c2 at the time of testing) built into a Debian package and deployed on an affected machine did not change anything, here also the counters immediately start to rise.

Do you have a way (bpf filter) to filter out this FabricPath traffic ?

We filtered away ethertype 0x8903 via a GigaVUE packet broker deployed at that site and this resulted in roughly less than half of the original packets being received by Suricata, however, with all counters normal and without excessive values for drops, wrong thread or invalid packets! Removing the filter immediately re-introduced the counter movements.

We also applied an opposite filter: filtering away all traffic except the one with ethertype 0x8903. Interestingly, this kept the packet on wrong thread counter low but immediately caused invalid packets to rise. It almost seems like the wrong thread counter only rises when both traffic types are seen on the line.

I'd say this is a new finding. Maybe it should make sense to look further into duplicated traffic with and without the Cisco layer. Could that cause -- through a change in hashing introduced via the patch -- packets with identical 5-tuple but with a different Ethernet layer to be mapped to wrong threads?

I am curious -- how can the wrong thread situation even occur? Could this be an effect of the flow hashes calculated in Suricata based on the packet contents being in disagreement with the assignment made by the cluster_qm mechanism that only takes into account the RSS queue the NIC slots the flow into? We also tested switching the cluster_mode on an affected machine to cluster_flow, which should IIRC only take Suricata's own calculations into account, and noticed that in that case we also get wrong thread errors. So, how can that be? Am I missing some insight into how the thread mapping actually works (and have to read the code? :P)

Well, I can confirm that I also see this pattern in the traffic captured from an affected system (that has such a Cisco layer).

I understand that you have a pcap for experimentation.

What anomaly events if you run https://github.com/OISF/suricata/pull/9779 on such a pcap ? And with master branch ?

I built these two versions locally (master based on 9c3ab36af) and ran the pcap against them with the same configuration as on the sensor. The result is interesting though:

master w/o 9779 patch:

$ jq .anomaly.event < anomaly.json | sort | uniq -c |sort -rn

8820 "stream.pkt_spurious_retransmission"

7889 "stream.pkt_invalid_ack"

7513 "stream.est_invalid_ack"

834 "stream.fin_but_no_session"

781 "stream.3whs_synack_with_wrong_ack"

317 "stream.pkt_invalid_timestamp"

207 "stream.3whs_wrong_seq_wrong_ack"

196 "stream.rst_but_no_session"

133 "stream.rst_invalid_ack"

119 "stream.closewait_fin_out_of_window"

116 "stream.fin_invalid_ack"

113 "stream.3whs_synack_resend_with_diff_ack"

63 "stream.closewait_ack_out_of_window"

52 "stream.closewait_invalid_ack"

50 "stream.fin1_invalid_ack"

48 "stream.est_pkt_before_last_ack"

34 "stream.timewait_ack_wrong_seq"

26 "stream.fin1_fin_wrong_seq"

20 "stream.pkt_broken_ack"

17 "stream.reassembly_seq_gap"

17 "APPLAYER_WRONG_DIRECTION_FIRST_DATA"

13 "stream.lastack_invalid_ack"

10 "stream.fin2_invalid_ack"

7 "stream.pkt_retransmission"

6 "stream.fin2_fin_wrong_seq"

5 "stream.closewait_pkt_before_last_ack"

5 "APPLAYER_DETECT_PROTOCOL_ONLY_ONE_DIRECTION"

4 "stream.est_packet_out_of_window"

2 "stream.fin_out_of_window"

master with 9779 patch:

$ jq .anomaly.event < anomaly.json | sort | uniq -c |sort -rn

355860 "decoder.ipv4.trunc_pkt"

3725 "stream.pkt_invalid_timestamp"

2832 "stream.pkt_spurious_retransmission"

667 "stream.fin_but_no_session"

455 "stream.3whs_synack_with_wrong_ack"

203 "stream.rst_but_no_session"

99 "stream.rst_invalid_ack"

99 "stream.pkt_invalid_ack"

26 "decoder.ipv6.trunc_pkt"

20 "stream.pkt_broken_ack"

Here the patched version (which is literally just the master with the small patch on top) seems to mirror the anomaly type distribution we have seen in production while the current master does not? This is different from our tests on the live machine where we tested the recent master back then (90c17652a3ee) which did not show any differences. Maybe we should retry with the latest? Very strange.

Updated by Philippe Antoine over 1 year ago

Also Zeek does not know od a footer cf https://github.com/zeek/zeek/blob/e15d68a15c554030657b7af39f01b95bf9d4ee43/src/packet_analysis/protocol/ethernet/Ethernet.cc#L23

Updated by Philippe Antoine over 1 year ago

So, trying to sum up :

- master with whole traffic: drops, wrong thread

- master without ethertype 0x8903 : ok

- master with only ethertype 0x8903 : what are the invalid packets ? like stream.pkt_spurious_retransmission ?

Is the problem that cluster_qm does not take into account FabricPath and sends the packet on a wrong thread, and this leads to drops ?

Updated by Philippe Antoine over 1 year ago

- Status changed from In Review to Feedback

Updated by Philippe Antoine about 1 year ago

- Status changed from Feedback to Closed

Closing as Suricata seems to work as expected (and as Zeek)

Problem needs to be handled at the packet broker level, and not at Suricata.

Sascha, feel free to reopen if you have further elements...

Updated by Victor Julien about 1 year ago

- Related to Optimization #2725: stream/packet on wrong thread added

Updated by Victor Julien 3 days ago

- Assignee deleted (

Philippe Antoine) - Target version deleted (

8.0.0-beta1)